Future Directions Workshop: Advancing the Next Scientific Revolution in Toxicology

April 28-29, 2022

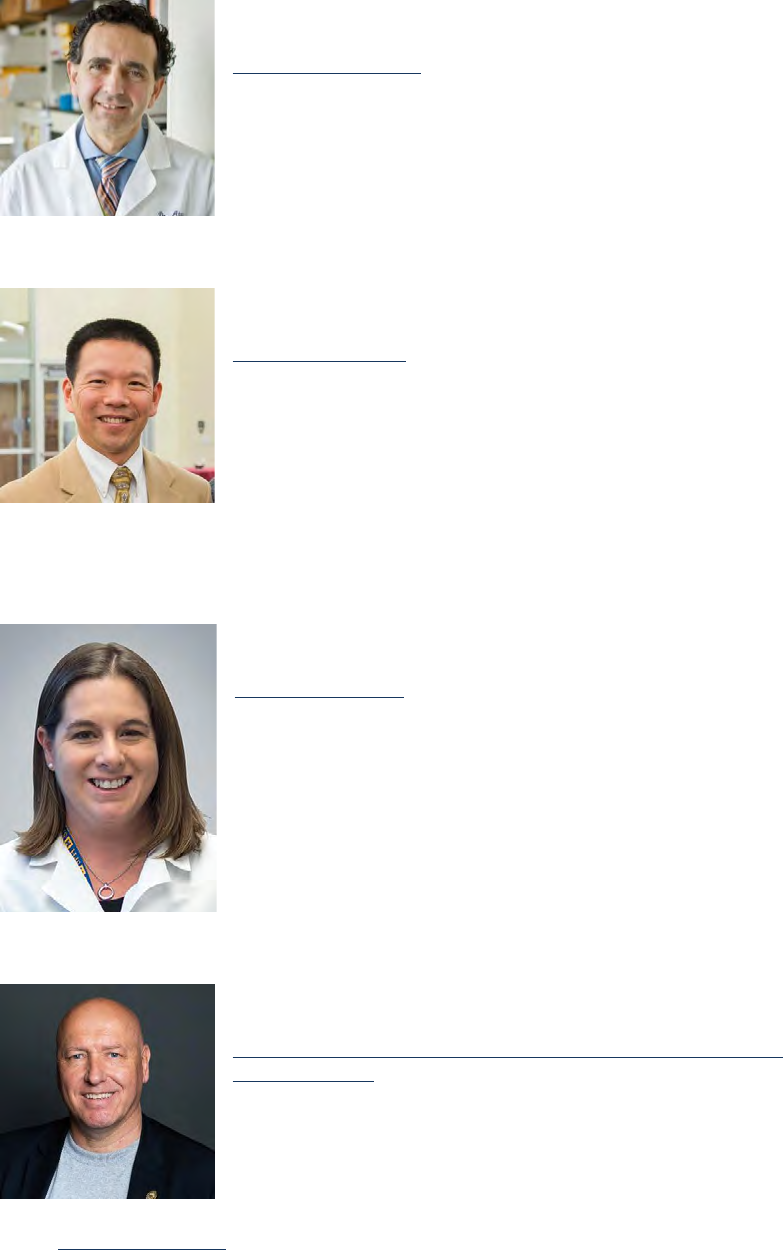

Thomas Hartung, Johns Hopkins University, University of Konstanz, and Georgetown University

Ana Navas-Acien, Columbia University

Weihsueh A. Chiu, Texas A&M University

Prepared by:

Kate Klemic, Virginia Tech Applied Research Corporation

Matthew Peters, Virginia Tech Applied Research Corporation

Shanni Silberberg, Office of the Under Secretary of Defense (Research & Engineering), Basic Research Office

Future Directions Workshop series

Workshop sponsored by the Basic Research Office, Office of the Under Secretary of Defense for Research & Engineering

CLEARED

For Open Publication

Department of Defense

OFFICE OF PREPUBLICATION AND SECURITY REVIEW

Apr 26, 2023

Contents

Preface

Executive Summary

Introduction

Toxicology Research Challenges

Toxicology Research Advances and Opportunities

Toxicology Research Trajectory

Accelerating Progress

Conclusion

Glossary

Bibliography

Appendix I: Workshop Attendees

Appendix II: Workshop Agenda and Prospectus

Innovation is the key to the future, but basic research is the key to future innovation.

– Jerome Isaac Friedman, Nobel Prize Recipient (1990)

Preface

Over the past century, science and technology have brought remarkable new capabilities to all sectors of the economy, from telecommunications, energy, and electronics to medicine, transportation, and defense. Technologies that were fantasy decades ago, such as the internet and mobile devices, now inform the way we live, work, and interact with our environment. Key to this technological progress is the capacity of the global basic research community to create new knowledge and to develop new insights in science, technology, and engineering. Understanding the trajectories of this fundamental research, within the context of global challenges, empowers stakeholders to identify and seize potential opportunities.

The Future Directions Workshop series, sponsored by the Basic Research Directorate of the Office of the Under Secretary of Defense for Research and Engineering, seeks to examine emerging research and engineering areas that are most likely to transform future technology capabilities. These workshops gather distinguished academic researchers from around the globe to engage in an interactive dialogue about the promises and challenges of each emerging basic research area and how they could impact future capabilities. Chaired by leaders in the field, these workshops encourage unfettered consideration of the prospects of fundamental science areas from the most talented minds in the research community.

Reports from the Future Directions Workshop series capture these discussions and therefore play a vital role in deliberations on basic research priorities. In each report, participants are challenged to address the following important questions:

• How will the research impact science and technology capabilities of the future?

• What is the trajectory of scientific achievement over the next few decades?

• What are the most fundamental challenges to progress?

This report is the product of a workshop held April 28-29, 2022, at the Basic Research Innovation Collaboration Center in Arlington, VA, on the future of toxicology research. It is intended as a resource for the S&T community, including the broader federal funding community, federal laboratories, the domestic industrial base, and academia.


Executive Summary

1 https://pubmed.ncbi.nlm.nih.gov/20574894/

In the nearly two decades since the human genome was sequenced, the field of toxicology has undergone a transformation. It has taken advantage of the explosion in biomedical knowledge and technologies to move from a largely empirical science aimed at ensuring the absence of harmful effects to a mechanistic endeavor aimed at elucidating disease etiology. That endeavor rests on an understanding of the biological responses to chemicals (including biochemistry) and their impact on organ systems. However, a substantial gap remains between the promise of mechanistic toxicology and its actual impact on improving human health. Toxicology continues to work in a largely reductionist paradigm of single endpoints, chemicals, and biological targets. Yet biology and pathobiology are known to involve complex interactions across each of these, and social stressors are increasingly recognized to have biological consequences as well. At the same time, the pace of scientific and technical advances has produced a deluge of models and data for understanding toxicological exposure, hazard, and risk that is increasingly challenging to evaluate, integrate, and interpret. There is therefore a critical need to understand how to leverage these new frontiers in toxicology to achieve the desired long-term impact of improving human health. This fundamental problem raises the question of which exposures, now or in the future, can contribute to disease, and it calls for a Human Exposome Project.

The 2007 National Research Council report Toxicity Testing in the 21st Century: A Vision and a Strategy¹ (Tox-21c) was a watershed moment for US toxicology. It changed the discussion from whether to change to when and how to change. Knowledge in the life sciences has doubled every seven years since 1980 and every 3.5 years since 2010, and publications have doubled every fifteen years (Bornmann, 2021; Densen, 2011). As a result, we now have about 16 times as much knowledge and twice as many publications as in 2007.
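The growth figures above follow from simple doubling arithmetic. The sketch below rests on two assumptions made for illustration: the stated doubling times apply piecewise (7 years until 2010, 3.5 years afterwards), and 2022, the workshop year, is the endpoint. Under these assumptions, knowledge grows roughly 14.5-fold. That is the same order of magnitude as the rounded “about 16 times”, which corresponds to four doublings. Publications exactly double.

```python
def growth_factor(years: float, doubling_time: float) -> float:
    """Multiplicative growth over `years` for a quantity that doubles every `doubling_time` years."""
    return 2 ** (years / doubling_time)


# Knowledge: 2007-2010 at a 7-year doubling time, then 2010-2022 at 3.5 years (assumed split).
knowledge = growth_factor(2010 - 2007, 7.0) * growth_factor(2022 - 2010, 3.5)

# Publications: 2007-2022 at a 15-year doubling time.
publications = growth_factor(2022 - 2007, 15.0)

print(f"knowledge: x{knowledge:.1f}, publications: x{publications:.1f}")
```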

The Future Directions in Toxicology Workshop convened on April 28-29, 2022, in Arlington, VA, to examine research challenges and opportunities to usher toxicology into a new paradigm as a predictive science. The workshop was hosted by the Basic Research Office in the Office of the Under Secretary of Defense for Research and Engineering. It gathered 20 distinguished researchers from across academia, industry, and government to discuss how basic research can advance the science of toxicology. The workshop aimed to develop the next generation of a vision for toxicology, “Toxicity Testing for the 21st Century 2.0: Implementation.” This vision extends that of the 2007 report and adapts it to scientific and technological progress. This report is the product of those discussions. It summarizes current research challenges, opportunities, and the trajectory of toxicological science for the next twenty years.

The vision developed at the workshop foresees toxicology developing into a Human Exposome Project that better integrates the exposure side of disease, focusing on real-world exposures affecting diverse populations over time. This would change the principal approach from a hazard-driven to an exposure-driven paradigm. The new paradigm identifies the relevant human or ecological exposures and then bases the risk assessment process on two pillars:

• exposomics, which forms an exposure/mechanism hypothesis from the multi-omics imprint in biofluids and tissues

• biomonitoring, the large-scale sampling and measurement of biospecimens

This new paradigm also incorporates negligible exposures and is focused on ensuring safety instead of predicting toxicity. Another critical aspect of this paradigm is the inclusion of disruptive research technologies. Examples are microphysiological systems, the bioengineering of organ architecture and functionality to model (patho-)physiology, and artificial intelligence/machine learning to process the complex data generated for informed decisions. Ultimately, we need to integrate the evidence provided by these technologies, especially through probabilistic risk assessment. An evidence-based toxicology approach ensures confidence and trust in the process by which scientific evidence is assessed for the safety of chemicals for human health and the environment.

The workshop was organized around three key areas that are likely to transform toxicology: 1) employing an exposure-driven approach, 2) utilizing technology-enabled techniques, and 3) embracing broad-scale evidence integration. Through their distinct perspectives and long-term goals, these three areas are expected to have a major impact on three key long-term public health goals: 1) Precision Health, 2) Targeted Public Health Interventions and Environmental Regulations, and 3) Safer Drugs and Chemicals.

Exposure-driven Toxicology

Exposure-driven assessments were not covered in the 2007 Tox-21c report and were only the subject of a parallel NRC report. The need to integrate them into toxicology, for example through exposomics, is nonetheless increasingly evident. Exposure-driven toxicology focuses on real-world exposures and gene-environment interactions that affect diverse populations. It can contribute to the three aims identified during the workshop:

1) precision health, through the identification of environmental exposures for improved health outcomes in specific populations

2) targeted public health interventions and environmental regulations to address those environmentally driven health outcomes

3) the identification of safer drugs and chemicals

Precision health aims for individual, personalized preventive interventions and for pharmaceutical and non-pharmaceutical therapies. Targeted public health interventions and environmental regulations must address population and spatial-temporal variability in the genome and epigenome, as well as in the exposome. Safer drugs and chemicals are to be attained through in vitro/in silico chemical screening, in vitro/in silico clinical trials, and the identification of intrinsic and extrinsic susceptibilities.

Workshop participants envision a Tox-21c 2.0 that reflects real-world exposure designs (in silico, cellular, organoids, models, organisms, longitudinal epidemiological studies). It will include population-scale measurements based on readily available biobanks and ecobanks. These inform on the distribution of thousands of chemical and non-chemical stressors in relevant populations (general population, relevant subgroups, disease cohorts). Notably, the exposomics approach can potentially interrogate all types of stressors, not just chemicals, that actually perturb biology and change biomarkers in body fluids. Study designs and computational approaches will be aligned to provide interpretable and actionable results. Ethical issues, policy implications, community engagement, and citizen participation will keep pace with and inform the technology, rather than being exclusively reactive to it.

In the near term, a critical first implementation step for exposure-driven toxicology and precision health is to scale up mass spectrometry technology. The goal is high-quality, inexpensive assessment of thousands of chemicals that can be tagged to exogenous exposures, including non-chemical stressors. Libraries that tag key information for those chemicals (metadata layering) will need to be expanded and developed. They will facilitate interpretation and guide preventive strategies, interventions, and policy recommendations.

In the mid-term, technologies will be required that link the exposome with health outcomes and leverage longitudinal studies and biobanks retrospectively and prospectively. These technologies must ensure “FAIR”-ness (Findability, Accessibility, Interoperability, and Reuse of digital assets)². In the long term (20 years), we envision that exposome-disease predictions and exposome-targeted prevention and treatment solutions will become part of the toxicology and public health practice landscape. They will also leverage other ~omics technologies, genomic information, and clinical characteristics.
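Tagged libraries support the interpretation of mass spectrometry data. As an illustration, the sketch below matches measured m/z values against a small reference library within a parts-per-million (ppm) tolerance. Each matched feature then inherits the library's metadata tags. Everything specific here is an assumption: the compound labels, masses, and tags are invented, and the 5 ppm tolerance is a placeholder. Real non-targeted workflows also use retention time, isotope patterns, adducts, and MS/MS spectra before assigning an identity.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class LibraryEntry:
    name: str                 # hypothetical compound label
    mz: float                 # reference m/z (invented value)
    tags: Tuple[str, ...]     # metadata layer, e.g. use category or exposure source


# Hypothetical library: names, masses, and tags are invented for illustration.
LIBRARY = [
    LibraryEntry("compound_A", 227.1070, ("plasticizer", "food contact")),
    LibraryEntry("compound_B", 301.1410, ("pesticide",)),
    LibraryEntry("compound_C", 413.2660, ("flame retardant", "house dust")),
]


def ppm_error(measured: float, reference: float) -> float:
    """Signed mass error in parts per million."""
    return (measured - reference) / reference * 1e6


def annotate(features: Sequence[float],
             library: Sequence[LibraryEntry] = LIBRARY,
             tol_ppm: float = 5.0) -> List[Tuple[float, Optional[LibraryEntry]]]:
    """Pair each measured m/z with the closest library entry within tol_ppm, or None."""
    results = []
    for mz in features:
        hits = [e for e in library if abs(ppm_error(mz, e.mz)) <= tol_ppm]
        best = min(hits, key=lambda e: abs(ppm_error(mz, e.mz)), default=None)
        results.append((mz, best))
    return results


for mz, hit in annotate([227.1075, 301.1500, 413.2655]):
    label = f"{hit.name} {hit.tags}" if hit else "unannotated"
    print(f"{mz:.4f} -> {label}")
```

With these inputs, the first and third features fall within tolerance of a library entry. The second is about 30 ppm off and stays unannotated, which is the situation expanded libraries are meant to reduce.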

Technology-enabled Toxicology

The workshop participants discussed technological advances of the last 10-15 years that are highly relevant to toxicology. These fall in three key areas: cell and tissue biology, bioengineering, and computational methods.

Workshop participants noted that biological technologies, such as stem cell engineering, have emerged as routine, commercial enterprises for biomedical research. Their potential in toxicology could be further expanded through:

a) reliable and genetically diverse cell sourcing

b) improved protocols to differentiate patient-derived stem cells into adult cell phenotypes across essential tissues

c) integrative and non-invasive biomarkers

d) integration of dynamic physiology and pathophysiology outcomes

e) population heterogeneity and susceptibility through life-courses

f) biological surrogates for non-chemical stressors

On the bioengineering side, technological capabilities such as microphysiological systems (MPS) have shown many successes in the laboratory. Workshop participants noted that they need further development to:

1) include a variety of models of increasing architectural complexity (monolayer/suspension cultures, organoids, and multi-organoid systems) for different stages of drug/chemical development

2) better represent healthy and diseased populations by a personalized multiverse of possible futures

3) codify platform standardization

4) increase throughput

5) demonstrate validation against in vivo outcomes

6) incorporate perfusion and biosensors with near real-time outputs

7) develop automated fabrication

In addition, the workshop participants noted that the emergence of “big data” and “big compute” has revolutionized much of biology through the ability to analyze and interpret complex and multi-dimensional information. Computational capabilities and models are of utmost importance for toxicology, serving as the key enabling technology. For instance, AI/machine learning has emerged as a key technology to support data mining, predictive modeling, hypothesis generation, and evidence interpretation (e.g., explainable AI). Data acquisition and data sharing following the FAIR principles are key to unleashing these opportunities. These needs require widespread use and understanding of these technologies in toxicology, combined with expert knowledge to yield augmented intelligence workflows. Moreover, these new technologies generate and consume large quantities of information. The workshop participants therefore agreed on a need for comparable, compatible, integrable multi-omic databases, for quantitative in vitro to in vivo extrapolation, and for the development of in silico “digital twins” of in vitro and in vivo systems.

2 https://www.go-fair.org/fair-principles/
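Quantitative in vitro to in vivo extrapolation is commonly framed as reverse dosimetry. A toxicokinetic model predicts the steady-state plasma concentration (Css) produced by a unit daily dose. Assuming linear kinetics, dividing the in vitro bioactive concentration by that value gives an oral equivalent dose. The sketch below shows only this final arithmetic step. The AC50, the Css value, and the exposure estimate are hypothetical. In practice, Css would come from a toxicokinetic model rather than being typed in.

```python
def oral_equivalent_dose(ac50_uM: float, css_uM_per_mg_kg_day: float) -> float:
    """Oral dose (mg/kg/day) expected to yield a steady-state plasma concentration
    equal to the in vitro bioactive concentration (AC50).

    Assumes linear kinetics, so Css scales in proportion to dose:
        OED = AC50 / Css(at 1 mg/kg/day)
    """
    if css_uM_per_mg_kg_day <= 0:
        raise ValueError("steady-state concentration must be positive")
    return ac50_uM / css_uM_per_mg_kg_day


# Hypothetical inputs for a single chemical.
ac50 = 3.0          # uM, in vitro bioactive concentration
css_unit = 1.5      # uM at steady state per 1 mg/kg/day (would come from a TK model)
exposure = 0.004    # mg/kg/day, estimated population exposure

oed = oral_equivalent_dose(ac50, css_unit)
ratio = oed / exposure  # bioactivity-to-exposure ratio; larger means a wider margin
print(f"OED = {oed:.2f} mg/kg/day; bioactivity-to-exposure ratio = {ratio:.0f}")
```

Comparing the oral equivalent dose with an exposure estimate in the same units turns an in vitro result into a margin that can support prioritization. This is one way the in vitro, in silico, and exposure streams can meet.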

Evidence-integrated Toxicology

Workshop participants discussed the key challenge of integrating data and methods (evidence streams) in test strategies, systematic reviews, and risk assessments. They agreed that evidence-based toxicology and probabilistic risk assessment are emerging solutions to this challenge. Evidence integration across evidence streams (epidemiological, animal toxicology, in vitro, in silico, non-chemical stressors, etc.) is expected to play a key role in translating evidence into knowledge that can inform decision-making. The group developed a vision to conduct complex rapid/real-time evidence integration. This would combine advancements in data sharing and the application of artificial intelligence (e.g., natural language processing) with the transparency and rigor of systematic reviews. To implement this vision, the workshop participants identified a need for collaborative, open platform(s) to transparently collect, process, share, and interpret data, information, and knowledge on chemical and non-chemical stressors. Creating these platforms is foundational for rapid and real-time evidence integration and will empower all steps of protecting human health and the environment. Several needs were identified to create this platform:

1) software development to create dynamic and accessible interfaces

2) definitive standards and key data elements to facilitate analysis of metadata and automated annotation

3) consideration of quality control

In conclusion, the workshop advocates a paradigm shift to “Toxicology 2.0” based on the evidence integration of emerging disruptive technologies, especially exposomics, microphysiological systems, and machine learning. To date, exposure considerations typically follow the identification of a hazard. Future Tox-21c 2.0 must instead be guided by the identification of relevant exposures through exposomics. The adaptation to technical progress, especially microphysiological systems and AI, requires harmonization of reporting and quality assurance. The key challenge lies in the integration of these different evidence streams. Evidence-based medicine can serve as a role model, with systematic reviews, defined data search strategies, inclusion and exclusion criteria, risk-of-bias analysis, meta-analysis, and other evidence synthesis approaches. These tools are mostly applied to existing data and studies. A new challenge is their prospective application to the composition of test strategies (Integrated Test Strategies, ITS; Integrated Approaches to Testing and Assessment, IATA; and Defined Approaches, DA). A key role for probabilistic risk assessment was also identified. The participants also emphasized the need to ensure validation of these new approaches and to expand training, communication, and outreach. Ultimately, the workshop calls for expanding the approach to a Human Exposome Project.
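A defined approach is a fixed, rule-based procedure for interpreting data. The sketch below makes this concrete with a rule loosely modeled on the “2 out of 3” defined approach used for skin sensitization hazard identification. Results are collected assay by assay, and a call is made as soon as two results agree. A third assay is therefore needed only when the first two disagree or one is inconclusive. The assays are hypothetical stand-ins that simply look chemicals up in invented sets. This is not an implementation of any regulatory guideline.

```python
from typing import Callable, Optional, Sequence

# An assay maps a chemical identifier to True (positive), False (negative),
# or None (inconclusive).
Assay = Callable[[str], Optional[bool]]


def two_out_of_three(chemical: str, assays: Sequence[Assay]) -> Optional[bool]:
    """Sequential '2 out of 3' rule: return the first call supported by two results."""
    if len(assays) != 3:
        raise ValueError("expects exactly three assays")
    results = []
    for assay in assays:
        outcome = assay(chemical)
        if outcome is not None:
            results.append(outcome)
        if results.count(True) >= 2:
            return True
        if results.count(False) >= 2:
            return False
    return None  # no two concordant results: inconclusive


# Hypothetical assays: each "detects" a fixed set of chemicals.
positive_sets = [{"chem_1", "chem_2"}, {"chem_1"}, {"chem_2"}]
assays = [lambda c, s=s: c in s for s in positive_sets]

for chem in ("chem_1", "chem_2", "chem_3"):
    print(chem, two_out_of_three(chem, assays))
```

Here chem_1 and chem_3 are resolved by the first two assays, whereas chem_2 needs the third. Fixing the rule in advance is what distinguishes a defined approach from case-by-case expert judgment.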


Introduction

In 1983, the US National Research Council (NRC) Committee on the Institutional Means for Assessment of Risks to Public Health published a foundational study titled “Risk Assessment in the Federal Government: Managing the Process” (NRC, 1983), commonly referred to as the Redbook. As Lynn Goldman put it, the Redbook “has created a framework for incorporation of toxicology into environmental decision-making that has withstood the test of time” (Goldman, 2003). It was complemented by the 2009 report “Science and Decisions: Advancing Risk Assessment,” also known as the Silverbook (NASEM, 2009), which highlighted some challenges in the process.

Parallel work by another NRC committee resulted in the 2007 NRC report “Toxicity Testing in the 21st Century” (NRC, 2007), or Tox-21c, which developed “a vision and a strategy” to transform the toxicological sciences. The gap analysis of that report has not really changed. Toxicology is still “time-consuming and resource-intensive, it has had difficulty in meeting many challenges encountered today, such as evaluating various life stages, numerous health outcomes, and large numbers of untested chemicals,” and it still needs “to use fewer animals and cause minimal suffering in the animals used.” The 2007 report was complemented by the NRC report “Exposure Science in the Twenty-first Century: A Vision and a Strategy” (NRC, 2012) and by “Using 21st Century Science to Improve Risk-Related Evaluations” (NASEM, 2017a). Tox-21c and the subsequent reports have changed the debate about the safety and risk assessment of substances in the US and beyond. They have also led to a remarkable number of initiatives and programs (Krewski, 2020).

The resulting diversity in approaches, combined with an ever-accelerating availability of disruptive technologies, calls for a re-conceptualization of the future of toxicology. The way forward is to dissolve the dichotomy of hazard and exposure sciences, embrace the disruptive technological advances, and foster evidence integration across these evidence streams.

In the nearly two decades since the human genome was sequenced, the field of toxicology has undergone a transformation. It has taken advantage of the explosion in biomedical knowledge and technologies to move from a largely empirical science aimed at ensuring the absence of harmful effects to a mechanistic endeavor. That endeavor aims to elucidate disease etiology and the biological response pathways induced by exposures. However, a substantial gap remains between the promise of mechanistic toxicology and the actualization of the field as a predictive science. For instance, high-throughput in vitro and in silico toxicity testing remains largely focused on prioritizing individual chemicals for future investigation. On the one hand, this allows limited resources to be focused. On the other hand, it may provide a false sense of safety for “de-prioritized” chemicals. Specifically, these efforts, and those aimed at translating such data into hazard or risk, have been hampered in three ways:

• inadequate coverage of important biological targets, given the limitations of current in vitro methods to simulate in vivo metabolism or predict effects in different tissues and across different life stages (Ginsberg, 2019)

• inadequate consideration of population heterogeneity

• a continued aim of providing assurances of safety rather than quantifying effects across the population

Furthermore, there has been little progress in understanding the complex interactions among chemicals. The same holds for interactions between chemicals and other intrinsic and extrinsic factors that affect population health, such as genetics and non-chemical stressors, including marginalization and other social determinants of health.

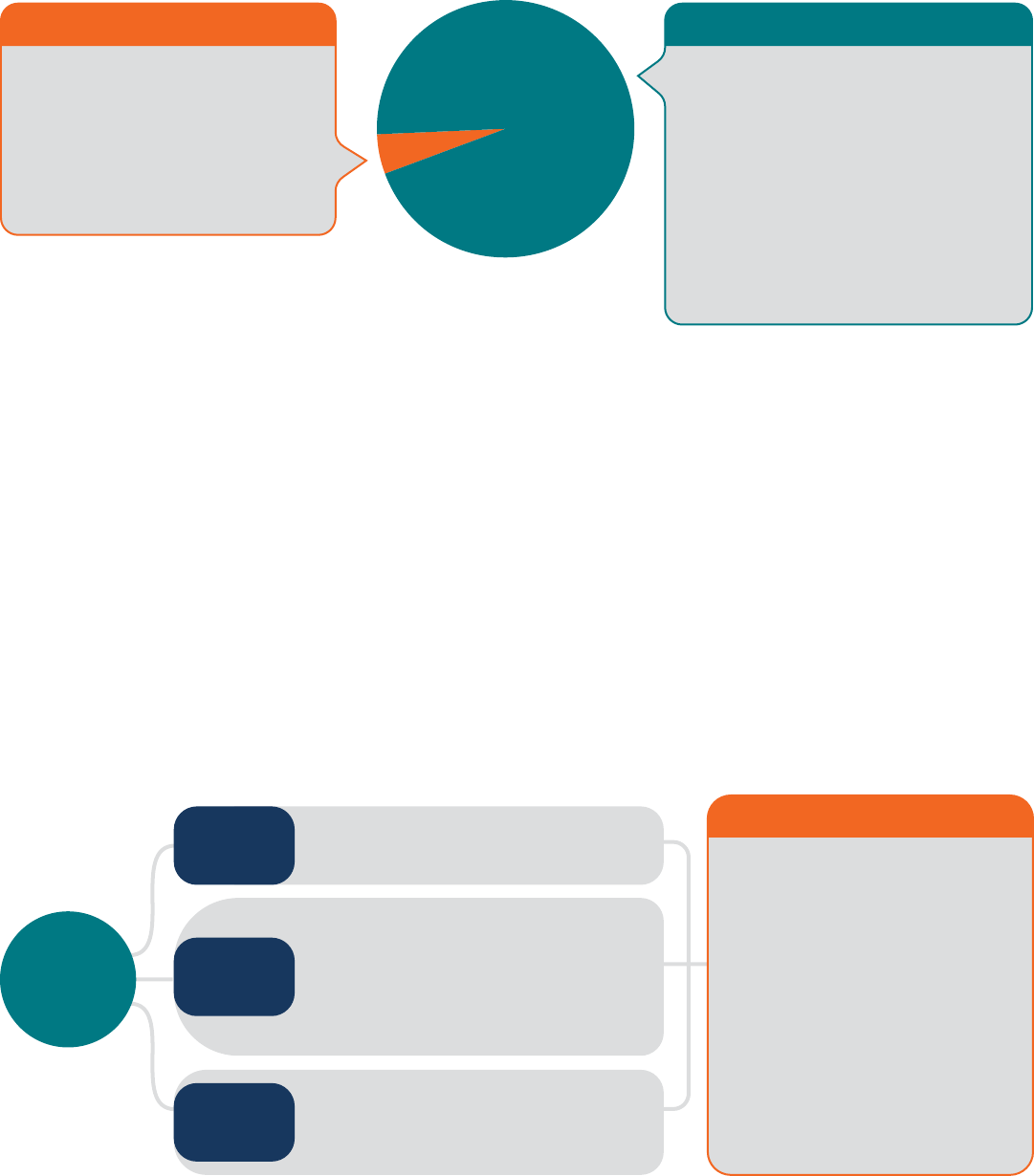

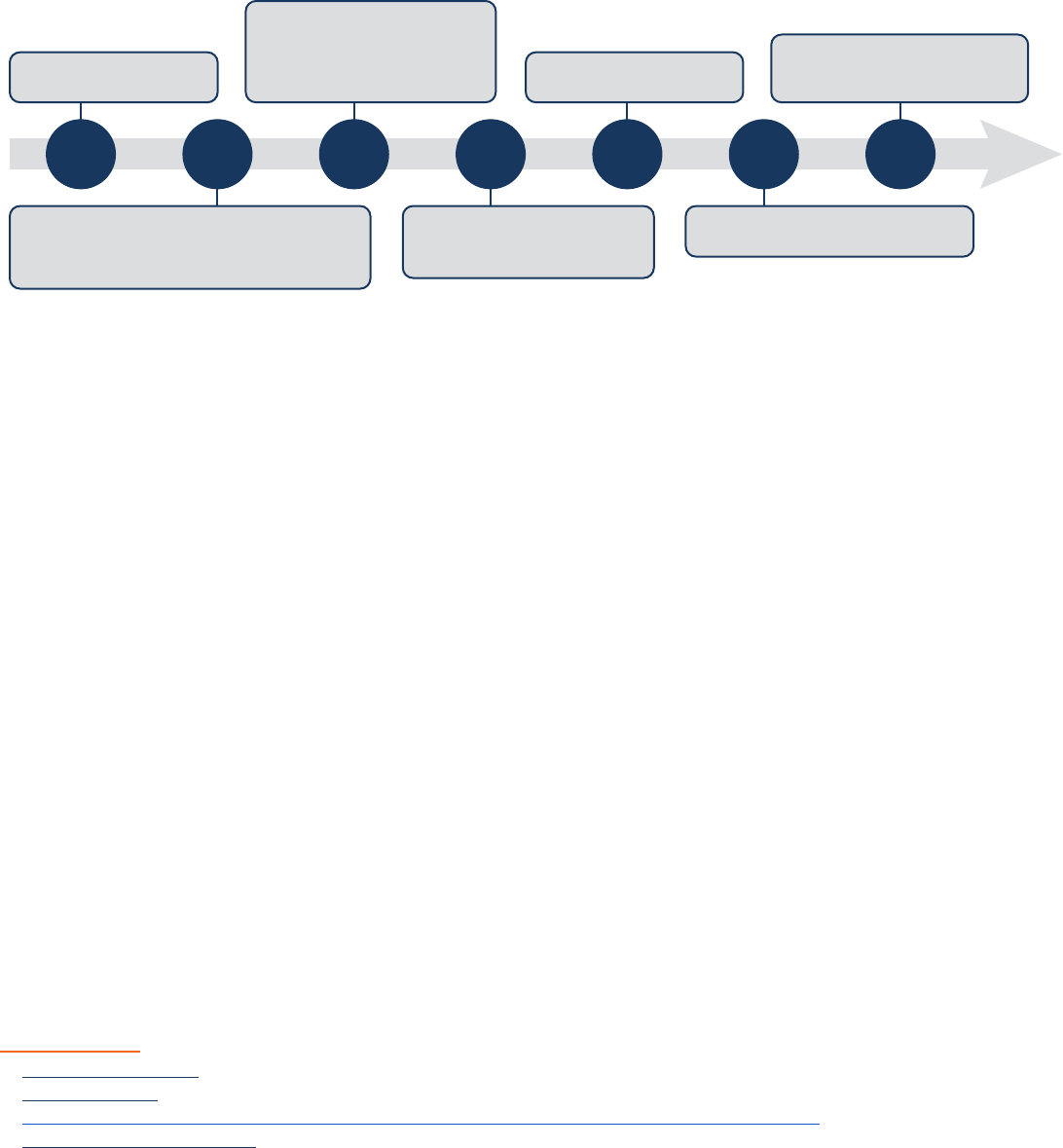

In practice, toxicology largely remains a process based on a reductionist paradigm (Figure 1, left side). It classifies individual chemicals for individual hazards and investigates simplistically “linear” mechanistic pathways based on individual biological targets. Significant research and development investment has been made in improving the throughput of toxicology through the advent of in vitro and in silico technologies. Even so, the vast majority of these efforts to make toxicity testing faster, cheaper, and perhaps more relevant are still fundamentally “one at a time” approaches. These feed into “one at a time” risk assessments and ultimately “one at a time” decisions. Thus, they address only a narrow slice of the human-relevant experiences of toxicity, in which 1) all exposures are time-dependent mixtures of chemical and non-chemical stressors, 2) every individual has unique susceptibilities and baseline conditions, and 3) multi-factorial, multi-causal outcomes are the norm (Figure 1).
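For mixtures, the hazard index is a long-standing, deliberately simple step beyond “one at a time” assessment. Assuming dose addition, it sums each component's hazard quotient (exposure divided by a reference dose). The sketch below uses invented exposures and reference doses. It shows how a mixture can exceed a threshold that no single component exceeds. It captures none of the time dependence, non-chemical stressors, or individual susceptibility listed above.

```python
from typing import Dict, Tuple


def hazard_quotient(exposure: float, reference_dose: float) -> float:
    """Exposure divided by reference dose (same units, e.g. mg/kg/day)."""
    return exposure / reference_dose


def hazard_index(mixture: Dict[str, Tuple[float, float]]) -> float:
    """Sum of hazard quotients, assuming dose addition across components."""
    return sum(hazard_quotient(e, rfd) for e, rfd in mixture.values())


# Hypothetical mixture: name -> (exposure, reference dose), both in mg/kg/day.
mixture = {
    "chem_1": (0.002, 0.005),    # HQ = 0.40
    "chem_2": (0.010, 0.030),    # HQ ~ 0.33
    "chem_3": (0.0003, 0.001),   # HQ = 0.30
}

hi = hazard_index(mixture)
max_hq = max(hazard_quotient(e, r) for e, r in mixture.values())
print(f"largest single HQ = {max_hq:.2f}, hazard index = {hi:.2f}")
```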


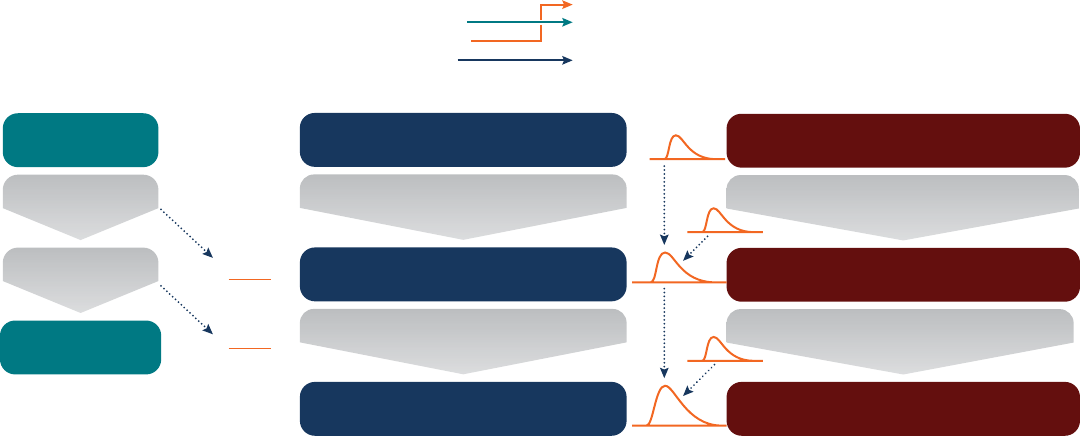

This report advocates for a fundamental shift to a holistic paradigm in which toxicology embraces complexity rather than sweeping it under the rug. Against this backdrop, the workshop was organized around three main research areas (Figure 2) that are key to enabling this paradigm shift.

First, both traditional mammalian toxicity testing and high-throughput screening assays largely focus on one chemical/mechanism/outcome at a time. This paradigm shift instead envisions toxicology to be exposure-driven. It addresses real-life exposure scenarios in which multiple agents, including social determinants of health, work together to affect multiple mechanistic pathways and health outcomes.

Second, this paradigm shift requires replacing individual assays that are genetically/epigenetically/exposomically homogeneous with multiplexed systems. These systems incorporate inter-cell/tissue interactions against a backdrop of population variability. Thus, toxicology will become technology-enabled. It will leverage technological advances from genetics to bioengineering to characterize toxicity in integrated in vitro/in silico platforms across the landscape of genomics, epigenomics, life stage, and non-chemical stressors.

Finally, with respect to risk, this paradigm shift requires moving away from single study-based binary (safe/unsafe) decision-making. Instead, diverse data across multiple data streams are integrated to reach a probabilistic assessment (Maertens, 2022) of multiple outcomes across the population. Thus, especially with the emergence of “big data” along with “big compute,” toxicology will be evidence-integrated, combining multiple evidence streams across diverse sources of structured and unstructured information.
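A probabilistic assessment replaces a binary safe/unsafe verdict with an estimate of how much of the population is affected. The minimal sketch below is an illustration, not a method endorsed by the workshop. It assumes that exposure and individual effect thresholds (reflecting population variability in susceptibility) follow independent lognormal distributions with made-up medians and geometric standard deviations. It then estimates the fraction of a simulated population whose exposure exceeds its own threshold. A closed-form result for this special case is included as a check.

```python
import math
import random


def fraction_exceeding(n: int = 100_000, seed: int = 1,
                       exp_median: float = 0.01, exp_gsd: float = 3.0,
                       thr_median: float = 1.0, thr_gsd: float = 5.0) -> float:
    """Monte Carlo estimate of P(exposure > individual effect threshold).

    Exposure and threshold (same units, e.g. mg/kg/day) are drawn from
    independent lognormal distributions given by median and geometric SD.
    """
    rng = random.Random(seed)
    exceed = 0
    for _ in range(n):
        exposure = rng.lognormvariate(math.log(exp_median), math.log(exp_gsd))
        threshold = rng.lognormvariate(math.log(thr_median), math.log(thr_gsd))
        if exposure > threshold:
            exceed += 1
    return exceed / n


def analytic_fraction(exp_median: float = 0.01, exp_gsd: float = 3.0,
                      thr_median: float = 1.0, thr_gsd: float = 5.0) -> float:
    """Closed-form check: log(exposure) - log(threshold) is normal with mean mu
    and SD sigma, so P(exposure > threshold) = Phi(mu / sigma)."""
    mu = math.log(exp_median) - math.log(thr_median)
    sigma = math.hypot(math.log(exp_gsd), math.log(thr_gsd))
    return 0.5 * (1.0 + math.erf(mu / (sigma * math.sqrt(2.0))))


print(f"simulated: {fraction_exceeding():.4f}  analytic: {analytic_fraction():.4f}")
```

With these invented parameters, both estimates come out just under 1%. Real applications would combine many such outcome-specific distributions and characterize uncertainty separately from variability. They would also draw their inputs from the integrated evidence streams rather than from assumed parameters.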

The rest of this report summarizes the discussion from the workshop relating to research challenges, research opportunities, and the ultimate trajectory to achieve the vision of a holistic, predictive toxicology.

Figure 1. The proposed paradigm shift in toxicology research. [Figure: “A Proposed Paradigm Shift: Embracing the Multi-Factorial, Multi-Causal Nature of Toxicity.”

• Current Reductionist Paradigm: one chemical, one endpoint, and one biological target at a time; a single genetic background tested; straight, linear mechanistic pathways; interactions simplified or ignored; a safe vs. unsafe dichotomy.

• Human-Relevant Experiences of Toxicity.

• A New Holistic Paradigm: directly addressing interactions among chemicals, non-chemical stressors, heterogeneous populations, social determinants of health, and life stages, including developmental origins of disease; mechanisms integrated into complex physiological networks; probabilistic quantification of impacts on the incidence and severity of human disease.]

Figure 2. The three workshop topics and the expected long-term impacts. [Figure: “Frontiers of Toxicology.”

• Exposure-driven: driven by real-life exposure scenarios and how multiple agents work together to affect multiple mechanistic pathways and health outcomes.

• Technology-enabled: enabling characterization of toxicity across genetics, life stage, and non-chemical stressors, and of the pathobiology of intermediate states, perturbations, and outcomes, while increasing accuracy, precision, relevance, and domains of applicability.

• Evidence-integrated: integrating across diverse sources of structured and unstructured information with enhanced access, management, evaluation, and communication.

Long-Term Impacts:

• Safer Chemicals and Drugs, through in vitro/in silico chemical screening, in vitro/in silico clinical trials, and identification of intrinsic and extrinsic susceptibilities.

• Precision Health, through individual, personalized preventive interventions and pharmaceutical and non-pharmaceutical therapies.

• Targeted Public Health Interventions & Environmental Regulations, addressing population and spatial-temporal variability in genome, epigenome, and exposome, as well as their socio-economic consequences.]

Toxicology Research Challenges

For many decades, the discussion of changing toxicological processes was driven by ethical concerns about animal use and the desire to develop and validate so-called “Alternative Methods.” In the last two decades, it has become increasingly clear that there are many more reasons to rethink the toolbox of risk sciences (Hartung, 2017a), namely:

• Long duration and low throughput do not match testing or public health needs (Hartung and Rovida, 2009; Meigs, 2018)

• Uncertainty in extrapolating results to humans (NASEM, 1983 [the “Red Book”], 1994, 2009 [Science and Decisions])

• Only a single chemical/endpoint at a time; does not account for multiple exposures and non-chemical exposures, including social determinants (Jerez and Tsatsakis, 2016; Bopp, 2019; NASEM, 2009 [Science and Decisions])

• Does not account for inter-individual variability (NAS, 2016)

• Does not incorporate associated socio-economic costs and benefits (Chiu, 2017; Meigs, 2018; NASEM, 2009 [Science and Decisions])

The ongoing transition in terminology in the field from “Alternative Methods” to “New Approach Methods” reflects this broader motivation for change. Tox-21c embraced these challenges. It developed a framework for an essentially mechanistic toxicology of perturbed pathways combined with quantitative in vitro-to-in vivo extrapolation to human exposure (Hartung, 2018). A roadmap of consequential steps was suggested (Hartung, 2009a; Hartung, 2009b).

Workshop participants discussed these overarching challenges to predictive toxicology and defined the key challenges for each area as follows:

Exposure-driven Toxicology

Populations are exposed to multiple environmental agents. These include chemical agents through air, water, food, and soil, and non-chemical agents such as noise, light, and social stressors (e.g., racism, socioeconomic deprivation, climate). Toxicological research is therefore needed that embraces an exposure-driven approach. Such research characterizes real-life exposure scenarios, including exposure mixtures, and how these agents work together to affect multiple mechanistic pathways and health outcomes. A key opportunity is the expansion of exposomic approaches (Sille, 2020; Huang, 2018; Escher, 2020) to include this broader landscape of exposures. The workshop participants highlighted three primary challenges to achieving a more exposure-driven approach:

• Real-world exposures: understanding the interplay of environmental and social stressors with genetic and molecular variants

• Predictive intervention: understanding the contributions of this research toward the identification and evaluation of effective interventions

• Targeted populations: the inclusion of the affected communities through participatory research efforts

There are several reasons why an exposure-driven approach has not yet been embraced. First, many relevant exposures are not yet fully characterized, because we lack the tools and technologies needed to characterize these exposures and to understand their health implications. In addition, the key stakeholders have not yet been successfully engaged. Foremost among them are the populations directly affected by these exposures, whose engagement is needed for preventive interventions to succeed. However, substantial advances are currently underway in these areas, and we anticipate substantial progress in the years to come.

Technology-enabled Toxicology

Predictive toxicology requires expanding the “toolbox” in several directions. The workshop participants identified the key challenges to developing the toolbox as:

• Broader model systems: Adverse outcomes involve interactions of the environment (see above), genes, and life stage. Our “model systems” therefore need to cover “gene” and “life stage” more broadly than is currently possible using traditional animal studies (e.g., typically inbred strains) or even most current high-throughput testing assays (e.g., typically based on genetically homogeneous immortalized cell lines). Example technologies include genetically diverse population-based in vitro and in vivo resources. Another is the expansion of experimental designs to cover different stages of development, as well as the developmental origins of health and disease.

• Access to the intermediate state: Our approaches currently cluster at the beginning (e.g., high-throughput assays) and the end (e.g., in vivo apical endpoints) of the pathophysiological process. They neglect the modulating and stochastic factors in between that influence outcomes. Approaches that provide access to intermediate states, perturbations, and outcomes are therefore needed to better understand the progression to disease. Example technologies include novel biomarkers, microphysiological systems (MPS, encompassing organoid and organ-on-chip technologies), and in silico models (e.g., systems toxicology/virtual experiments, AI/ML).

• Assessment tools: We lack the ability to characterize the predictive accuracy, precision, and relevance of new approaches or to understand their domains of applicability.
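One part of this challenge is estimating predictive accuracy. This typically starts by comparing a new approach's calls with reference outcomes. The sketch computes sensitivity, specificity, and balanced accuracy for invented binary calls. It assumes a trustworthy binary reference, which is often the hardest part in practice. It also says nothing about relevance or the domain of applicability.

```python
from typing import Dict, Sequence


def performance(predicted: Sequence[bool], reference: Sequence[bool]) -> Dict[str, float]:
    """Sensitivity, specificity, and balanced accuracy of binary calls vs. a reference."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p and r)
    tn = sum(1 for p, r in pairs if not p and not r)
    fp = sum(1 for p, r in pairs if p and not r)
    fn = sum(1 for p, r in pairs if not p and r)
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }


# Hypothetical calls from a new approach and hypothetical reference outcomes.
new_method = [True, True, False, False, True, False]
reference = [True, False, False, True, True, False]
print(performance(new_method, reference))
```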


Evidence-integrated Toxicology

Toxicology is currently transitioning from a data-poor to a

data-rich science with the curation of legacy databases, “grey”

information on the internet, mining of scientic literature, sensor

technologies, -omics, robotized testing, high-content imaging,

and others. The workshop participants identied the key

challenges to evidence-integrated toxicology as:

• Information sources: There are no established methods

or consensus on how to handle new types of information

sources (which may be incomplete) or how to weigh

evidence strength, risk of bias, quality scoring, etc., or how

to integrate the evidence streams.

• Validation/Verification: In the case of probabilistic risk

assessment, sources of evidence are already integrated,

resulting in a more holistic probability of risk/hazard, so the

challenge is to determine how to validate these probabilities

(e.g., through real-life, fit-for-purpose ground-truthing,

qualification, and triangulation) and how to communicate them.

• Data Science: We have not yet adopted best practices

for data curation and storage, data mining, analysis, and

visualization.


Toxicology Research Advances and Opportunities

The workshop participants anticipate exciting new research

advances on the path to achieving the vision of a holistic,

predictive toxicology that addresses real-life exposure scenarios,

leverages technological advances, and integrates multiple

evidence streams across diverse sources. This section presents

those advances and opportunities according to the three

workshop themes.

Exposure-driven Toxicology

The risks of developing chronic diseases are attributed to

both genetic and environmental factors, e.g., 40% of 560

diseases studied had a genetic component (Lakhani, 2019)

while 70 to 90% of disease risks are probably due to differences

in environments (Rappaport and Smith, 2010). The human

genome has been at the center of medical research for the last

forty years, but not many major diseases can be explained or

treated as a result.

Understanding exposure effects and genome x exposure

(GxE) interactions is thus central to the future of medicine.

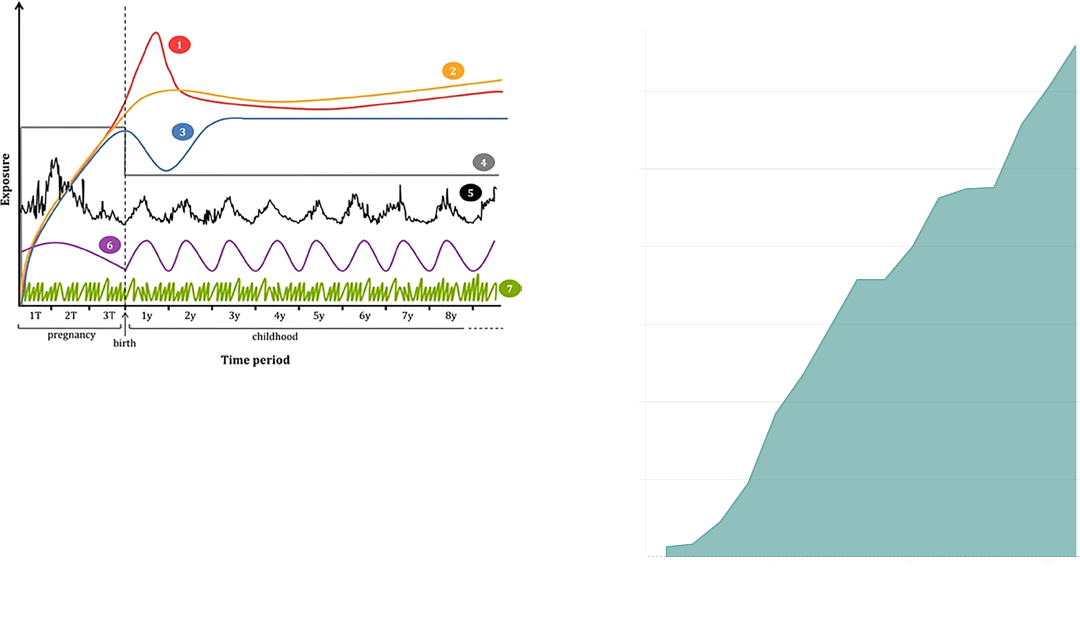

The original concept of the exposome (Wild, 2016; Vermeulen,

2020), encompassing all exposures of an individual over time,

seems to be impractical and unfeasible as a goal (Figure 3).

The National Academies of Sciences report (NRC, 2010) has

even elaborated on this concept. The 180 million synthesized

chemicals, 350,000 of which are registered for marketing in the

19 most developed countries (Wang, 2020), and myriad natural

and breakdown products seem to make it impossible to measure

and study their effects on humans and the environment. Current

approaches in cells or animals can cost from several thousand to

a million dollars per substance and health effect (Meigs, 2018).

Worldwide toxicity testing covers only a few hundred substances

comprehensively and costs about $20 billion per year. In addition,

human and ecological exposure to substances does not occur

one substance at a time or follow any constant exposure

scheme. Understanding all of it, or even much of it, seems an

impossible mission.

This has led to a hazard-driven approach to toxicology, i.e., an

established hazard is followed up with exposure considerations

to assess risk. The threshold of toxicological concern (TTC)

concept (Hartung, 2017b) has been introduced to make

pragmatic use of this by taking the fifth percentile of the

distribution of lowest-observed-effect levels (LOEL) or

no-observed-effect levels (NOEL) across chemicals and

applying a safety factor of 100. This essentially sets a limit

of possible toxicity at one-hundredth of a point of departure

that is lower than those of 95% of relevant chemicals. For

instance, TTC could potentially be used to waive risk assessment

where exposure and/or bioavailability (internal TTC) (Hartung and

Leist, 2008; Partosch, 2015) are negligible (Wambaugh, 2015),

thus showing a path for how substances could be triaged

according to their negligible exposure. However, TTC-type

approaches are still a “one chemical at a time” paradigm, may

not account for exposures varying temporally or across the

population, and do not address potential interactions among

the thousands of substances to which people are constantly

exposed.
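The TTC arithmetic described above (a low percentile of effect levels across chemicals, divided by a safety factor of 100, then used to triage substances by exposure) can be sketched as follows. This is a minimal illustration under stated assumptions: the NOEL values, chemical names, and exposure estimates are hypothetical, and real TTC tiers are derived from curated databases grouped by structural class (e.g., Cramer classes), which this sketch ignores. The internal TTC variant, which would compare internal rather than external doses, is not modeled.

```python
import statistics


def ttc_from_noels(noels, percentile=5, safety_factor=100.0):
    """Hypothetical TTC-style threshold: the chosen percentile of a set of
    NOEL/LOEL values (one per chemical, same units) divided by a safety factor."""
    # quantiles(n=100) returns the 99 cut points for the 1st..99th percentiles
    cuts = statistics.quantiles(noels, n=100, method="inclusive")
    return cuts[percentile - 1] / safety_factor


def triage(exposures, threshold):
    """Split chemicals by estimated exposure: below the threshold (candidate
    for deprioritization) versus at/above it (needs further assessment)."""
    below = sorted(name for name, dose in exposures.items() if dose < threshold)
    above = sorted(name for name, dose in exposures.items() if dose >= threshold)
    return below, above


# Illustrative inputs only (mg/kg bw/day); not a real TTC database.
noels = [float(v) for v in range(1, 101)]
ttc = ttc_from_noels(noels)
low_priority, follow_up = triage({"chem_A": 0.001, "chem_B": 0.5, "chem_C": 0.03}, ttc)
```

The sketch also makes the stated limitation concrete: each chemical is compared to the threshold on its own, so nothing in it accounts for mixtures or for exposures that vary over time.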

In recent years, the concept of the exposome has been

proposed to capture the diversity and range of environmental

exposures (e.g., inorganic and organic chemicals, dietary

constituents, psychosocial stressors, physical factors), as well

as their corresponding biological responses (Vermeulen,

2020). While measuring those exposures throughout the

lifespan is challenging, technology-enabled advances, such

as high-resolution mass spectrometry, network science, and

numerous other tools provide promise that great advances in

the characterization of the exposome are possible. Indeed,

the exposome approach has achieved traction in recent times

because of the availability of -omics technologies (Sille, 2020)

as discussed below. An NIEHS workshop (Dennis, 2017) identified the

following advantages of an exposome approach:

• Agnostic approaches are encouraged for detection of

emerging exposures of concern

• Techniques, and the development of techniques, promote

identification of unknown/emerging exposures of concern

• Links exogenous exposures to internal biochemical

perturbations

• Many features can be detected (> 10,000) for the cost of a

single traditional biomonitoring analysis.

• Includes biomolecular reaction products (e.g., protein

adducts, DNA adducts) for which traditional biomonitoring

measurements are often lacking or cumbersome

• Requires a small amount of biological specimen (~100 μL or

less) for full-suite analysis

• Enables detection of “features” that are linked to exposure

or disease for further confirmation

• Encourages techniques to capture short-lived chemicals

• Aims to measure biologically meaningful lifetime exposures,

both exogenous and endogenous, of health relevance

Figure 3 The exposome concept. [Adapted from Vermeulen, 2020].

A number of research studies have started to apply these targeted

and untargeted technologies to characterize these complex

exposures, how they affect health and disease, and which

pathways are involved. For instance, birth cohort studies

are attempting to characterize complex and cumulative

exposures during critical windows that are of increased importance

for long-term human health (Figure 4). These studies are not

limited to chemical exposures; they also consider non-chemical stressors

throughout the lifespan. The concept of cumulative exposures is

critical, as some communities are disproportionately exposed to an

accumulation of chemical and non-chemical exposures, which over

time can result in adverse health outcomes.

Figure 4 The early life exposome. Examples of relevant exposures and

their exposure patterns during pregnancy and childhood, including

1) persistent organic pollutants (POPs), 2) mercury, lead, 3) arsenic, 4)

secondhand smoke, 5) air pollution, noise, 6) UV radiation, seasonal

exposure to chemicals, 7) non-persistent pollutants. Other exposures

such as psychosocial stressors could follow different exposure patterns.

[Adapted from Robinson, 2015].

These laboratory and exposure science advances also require,

in parallel, advances in biostatistics and data science to

maximize the information that can be obtained from these

high-dimensional data. For instance, elastic-net regularized

regression is becoming a popular machine learning tool that can

be used to identify the relevant predictors from these complex

sets of exposure data. Such high-dimensional

models were of great relevance in identifying key factors

associated with endogenous intermediate pathways (e.g.,

inflammation, protein damage, oxidative stress, and others) in

a pregnancy cohort from Massachusetts called the LIFECODES

cohort (Aung, 2021). These types of cohort studies, with complex

exposure data in diverse populations, prospective follow-up, and

high-quality health outcome data, will continue to grow and will

become key tools to advance exposure-driven toxicology.
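To show how elastic-net regression selects a few relevant predictors from many correlated exposures, here is a minimal pure-Python sketch using cyclic coordinate descent on synthetic data. It is not the LIFECODES analysis: the data, the penalty strength `lam`, and the mixing weight `alpha` are hypothetical. In practice predictors are standardized, the penalty is chosen by cross-validation, and established implementations (e.g., glmnet in R or scikit-learn's `ElasticNet` in Python) would be used instead.

```python
import random


def soft_threshold(z, gamma):
    """Lasso shrinkage operator S(z, gamma)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def elastic_net(X, y, lam, alpha=0.5, max_iter=1000, tol=1e-8):
    """Cyclic coordinate descent minimizing
    (1/2n)*||y - b0 - X b||^2 + lam*(alpha*||b||_1 + (1 - alpha)/2*||b||_2^2).
    X is a list of rows (samples x exposures); returns (intercept, coefficients)."""
    n, p = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(p)] for row in X]  # centered columns
    resid = [v - y_mean for v in y]  # residuals while all coefficients are zero
    z = [sum(r[j] ** 2 for r in Xc) / n for j in range(p)]
    beta = [0.0] * p
    for _ in range(max_iter):
        max_step = 0.0
        for j in range(p):
            if z[j] == 0.0:
                continue  # a constant column carries no information
            # covariance of column j with the partial residual that excludes j
            rho = sum(Xc[i][j] * (resid[i] + Xc[i][j] * beta[j]) for i in range(n)) / n
            new = soft_threshold(rho, lam * alpha) / (z[j] + lam * (1.0 - alpha))
            step = new - beta[j]
            if step != 0.0:
                for i in range(n):
                    resid[i] -= Xc[i][j] * step
                beta[j] = new
                max_step = max(max_step, abs(step))
        if max_step < tol:
            break
    intercept = y_mean - sum(b * m for b, m in zip(beta, x_mean))
    return intercept, beta


# Synthetic example: 5 hypothetical exposures, only exposures 0 and 3 matter.
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(5)] for _ in range(200)]
y = [3.0 * row[0] - 2.0 * row[3] + rng.gauss(0, 0.5) for row in X]
b0, beta = elastic_net(X, y, lam=0.2, alpha=0.9)
selected = [j for j, b in enumerate(beta) if b != 0.0]
```

The L1 part of the penalty sets weak predictors exactly to zero, which is what makes the method useful for variable selection. The L2 part keeps groups of correlated exposures from being dropped arbitrarily.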

Technology-enabled Toxicology

The near-exponential growth in biotechnology and

bioengineering over the last few decades has created numerous

technologies that could be applied or leveraged in toxicology.

Here we highlight three complementary technology areas that

have the potential to vastly increase the coverage, biological

relevance, and depth of data available for assessing the human

health effects of chemical exposures.

Induced Pluripotent Stem Cell Technologies

The discovery that somatic cells can be reprogrammed

to become pluripotent, recognized by the Nobel Prize in

Physiology or Medicine in 2012, has led to a vast array of

advances in biomedical science, from basic cell biology to

regenerative medicine. Thus, induced pluripotent stem cells

(iPSCs) are among the most substantial research advances of

the 21st century, on par with the sequencing of the human

genome, with thousands of publications per year utilizing this

technology (Figure 5).

Figure 5 Annual growth in publications for “induced pluripotent stem

cells” query in PubMed, as of July 2022.

In principle, such technologies would enable one to generate

unlimited cells and tissues that retain the genetic information

of the original donor. Cardiomyocytes were one of the first

functional cell types to be successfully differentiated from iPSCs

and have gone from the research lab to commercial application in

preclinical safety evaluation of xenobiotics in less than a decade

(Burnett, 2021). They have been found to be useful in identifying

cardiotoxicity hazards for both drugs and environmental

chemicals and are key components of a broad FDA-led initiative,

the Comprehensive in vitro Proarrhythmia Assay (CiPA)³, to address

drug-induced arrhythmias (see Figure 6).

However, even for this relatively “mature” technology of

iPSC-derived cardiomyocytes, a number of limitations remain,

including their more fetal-like phenotype and

challenges in routine and reproducible differentiation from

individual patients. These challenges are even more pronounced

for other cell types, as discussed below.

Nonetheless, the potential for iPSCs to revolutionize biomedical

science overall, and toxicology in particular, is well recognized,

especially when coupled with the rapid development of

advanced in vitro and microphysiological technology.

In vitro and Microphysiological Systems (MPS) Technologies

As discussed in “Toxicology Research Challenges”, there is an

increasing recognition that meeting the needs of toxicology will

require expanding beyond the use of traditional preclinical in

vivo rodent models. These technologies have been termed “New

Approach Methods” (Environmental Protection Agency, European

Chemicals Agency) or “Alternative Methods” (Food and Drug

Administration), and all have the aim of increasing the rigor and

predictivity of toxicity assessments while reducing the reliance

on vertebrate models. Much of the progress in the last 15 years

has been on high-throughput in vitro systems, exemplified by the

Tox21 Consortium⁴, a federal collaboration among the U.S.

Environmental Protection Agency, National Toxicology Program,

National Center for Advancing Translational Sciences, and the

Food and Drug Administration focusing on “driving the evolution

of Toxicology in the 21st Century by developing methods to

rapidly and efficiently evaluate the safety of commercial chemicals,

pesticides, food additives/contaminants, and medical products.”

This effort made use of commercially available assay platforms

across a wide range of targets, testing almost 10,000 compounds.

The screening data generated across a wide diversity of chemicals

and potential mechanisms of toxicity has resulted in hundreds of

publications, with many lessons learned as to the opportunities

and challenges in high-throughput screening data (Richard, 2021).

3 https://cipaproject.org

4 https://tox21.gov

5 https://www.fda.gov/science-research/about-science-research-fda/advancing-alternative-methods-fda

6 https://mpsworldsummit.com

The workshop participants agreed that more advanced in

vitro technologies, in particular microphysiological systems

(Marx, 2016; Marx, 2020; Roth, 2022), represent the next great

opportunity to advance toxicology (NASEM, 2021). An MPS

model has been defined as one that “uses microscale cell culture

platform for in vitro modeling of functional features of a specific

tissue or organ of human or animal origin by exposing cells

to a microenvironment that mimics the physiological aspects

important for their function or pathophysiological condition.”⁵

These may include a wide variety of types of platforms, from

mono-cultures to co-cultures and organoids, and also include

so-called “organ-on-chip” models that include an engineered

physiological micro-environment with functional tissue units

aimed at modeling organ-level responses. These “chip” models

consist of four key components:

• microfluidics to deliver target cells and culture fluid and to

discharge waste

• living cell tissues in either 2D or 3D, including

scaffolding and physical or chemical signals that simulate the

physiological microenvironment

• a system for delivering the drug or chemical, either through

the same microfluidics that deliver culture fluid or via a

separate channel (e.g., air-liquid interface)

• a sensing component that may be embedded (e.g.,

electrodes), visual (via transparent materials), or assayed

from effluent

The mushrooming of MPS models has been fueled by stem

cell technologies, 3D cultures (Alepee, 2014), microfluidics

(Bhatia and Ingber, 2014), sensor technologies (Clarke, 2021),

bioprinting (Fetah, 2019), and others. Figure 7 shows different

ways of producing 3D cultures, which are essential for recreating

the organ architecture and functionality that characterize MPS. Notably, the

MPS field has recently started to organize itself through annual

global meetings and an International MPS Society.⁶

[Figure 6 timeline; years shown: 2006, 2007, 2011, 2016, 2017, 2018, 2019. Milestones shown: generation of iPSCs from mouse fibroblasts; generation of iPSCs from human fibroblasts; generation of iPSC-derived CMs from human fibroblasts; use of patient-specific iPSC-CMs for disease modeling; use of iPSC-CMs for drug toxicity testing; use of iPSC-CMs from patients to recapitulate clinical toxicity of a drug; use of iPSC-CMs for non-drug toxicity testing; use of a healthy population of iPSC-CMs for drug toxicity testing; use of a healthy population of iPSC-CMs for environmental chemical toxicity testing.]

Figure 6 Developmental timeline of induced pluripotent stem cell-derived cardiomyocytes (iPSC-CMs) for toxicity testing. [Source: Burnett, 2021]

Figure 7 Ways to generate 3D cultures.

MPS models have been developed for nearly every human organ,

and several have been linked together into multi-organ platforms

(Hargrove-Grimes, 2021) (Figure 8).

Figure 8 “Man-on-a-chip.” [Source: Materne, et al., 2013]

Moreover, applications have been reported in drug development,

disease modeling, personalized medicine, and assessment of

environmental toxicants. However, many translational challenges

remain that hinder the application of MPS in toxicology

(Andersen, 2014; Watson, 2017; Nitsche, 2022). Efforts continue

to improve external validation, reproducibility, and quality

control and to enable technology transfer. Notably, Good

Cell and Tissue Culture Practice (GCCP 2.0, Pamies et al., 2022)

has extended these quality standards to MPS. Overall, the throughput

remains low, and the cost remains high, hampering broader

application of these technologies, particularly as benchmarking

against simpler in vitro systems has not always revealed

sufficient improvements to warrant the additional time, cost, and

complexity. Nonetheless, emerging efforts to define appropriate

“context of use” cases for MPS are promising through the

continued interactions among researchers, regulators, and the

private sector (Hargrove-Grimes, 2021; NAS, 2021).

Imaging and Other High-content Measurement Technologies

The high complexity of MPS and their consequently lower throughput

make them an ideal match for high-content measurement

technologies, which, through a comprehensive analysis

of the biological system, provide maximum insight into the Adverse

Outcome Pathway (AOP) at play.

High-content imaging (HCI) combines automated microscopy

with image analysis approaches to simultaneously quantify

multiple phenotypic and/or functional parameters in biological

systems. The technology has become an important tool in the

fields of toxicological sciences and drug discovery because it

can be used for mode-of-action identification, determination

of hazard potency, and the discovery of toxicity targets and

biomarkers (van Vliet, 2014). In contrast to conventional

biochemical endpoints, HCI provides insight into the spatial

distribution and dynamics of responses in biological systems.

This allows the identification of signaling pathways underlying

cell defense, adaptation, toxicity, and death. Therefore, high

content imaging is considered a promising technology to

address the challenges for the Tox-21c approach. Currently, HCI

technologies are frequently applied in academia for mechanistic

toxicity studies and in pharmaceutical industry for the ranking

and selection of lead drug compounds or to identify/confirm

mechanisms underlying effects observed in vivo.

Several ~omics technologies such as genomics, transcriptomics,

proteomics, metabolomics, lipidomics etc. represent further high-

content technologies allowing deep phenotypic characterization

and mechanistic analysis. Hartung and McBride (2011) earlier suggested

combining orthogonal ~omics technologies to

map pathways of toxicity (PoT) (Kleensang, 2014). Notably,

the PoT concept is reminiscent of the AOP approach; both were

proposed independently in 2011. However, there are some

fundamental differences (Hartung, 2017c): AOPs are designed by

experts largely based on their understanding and review of the

literature; for this reason, they are strongly biased by current

knowledge/belief, typically not quantitative, and difficult to

validate experimentally. AOPs are narrative, have a low level of detail,

and are largely a linear series of events. PoT, in contrast, are deduced

from experimental data, especially pathway analysis of

untargeted ~omics data. PoT are defined at the molecular

level with a high level of detail, integrate emerging information,

and mainly describe network perturbation. They can be studied

further by interventions in the experimental system and often

allow quantitative description. This process is not free of biases

either, and the most promising combination of different omics

technologies is still early in development.
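Deducing pathways from untargeted ~omics data usually starts with some form of pathway analysis. One common and simple variant is over-representation analysis: testing whether the features altered by a chemical (genes, proteins, metabolites) fall into a predefined pathway more often than chance would predict. The sketch below uses a one-sided hypergeometric test with a Bonferroni correction; the feature names and pathway definitions are hypothetical, and this is not presented as the specific method used to derive PoT, which can also involve network-based approaches.

```python
from math import comb


def hypergeom_sf(k, population, successes, draws):
    """P(X >= k) for X ~ Hypergeometric(population, successes, draws)."""
    upper = min(successes, draws)
    tail = sum(comb(successes, i) * comb(population - successes, draws - i)
               for i in range(k, upper + 1))
    return tail / comb(population, draws)


def enrichment(hits, universe, pathways):
    """Rank pathways by over-representation of altered features ("hits"),
    with a Bonferroni correction across the pathways tested."""
    universe = set(universe)
    hits = set(hits) & universe
    results = []
    for name, members in pathways.items():
        members = set(members) & universe
        overlap = len(hits & members)
        p = hypergeom_sf(overlap, len(universe), len(members), len(hits))
        results.append((name, overlap, min(1.0, p * len(pathways))))
    return sorted(results, key=lambda r: r[2])


# Hypothetical example: 100 measured features, 10 altered by a test chemical.
universe = [f"g{i}" for i in range(100)]
pathways = {"pathway_A": [f"g{i}" for i in range(10)],
            "pathway_B": [f"g{i}" for i in range(50, 60)]}
hits = [f"g{i}" for i in range(8)] + ["g90", "g91"]
results = enrichment(hits, universe, pathways)
```

Because pathway membership here comes from predefined sets, this kind of analysis inherits the curation biases of those sets. That is one reason the text notes that data-driven PoT mapping is "not free of biases either".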

[Figure 7 panels: brain slices; re-aggregating cultures; colonization of scaffold; layered co-culture; hanging drop; Transwell culture; bioprinting.]

Evidence-integrated Toxicology

Toxicology is at the intersection of application and basic science,

serving as an integrator of health sciences and public health. While

this is a very powerful position, the dominance of the regulatory

perspective constrains stakeholders. Traditionally, regulation needs

predictions regarding single chemicals, but as a consequence,

risk assessors are stuck in a system where they are tackling one

chemical at a time. Because this is how toxicologists are trained

and how regulatory requirements are formulated, toxicology has

been shaped into a “one-chemical-at-a-time” science. The entire

ecosystem of regulators, the public, and private industry have

ended up focusing on understanding the impacts of each specific

chemical on health or environmental outcomes, even leading

to the creation of trade associations devoted solely to a single

chemical. Breaking out of this paradigm, at minimum, requires

that toxicologists share the data they collect so it can be assessed

and integrated with other data to create a more holistic view of

a chemical’s risk profile. If a risk assessor chooses a new tool, its

integration requires broader discussion with regulators and often

regulations must ultimately be updated. Ideally, this discussion and

any information on the tool is public where others can comment

on it. Since there is no centralized effort to do so currently, data

sharing falls to the individual toxicologist and is not necessarily a

common practice. True change requires moving public

understanding and regulatory requirements forward together with the field,

which is very difficult to achieve simultaneously.

Big Data

Eighty-four percent of all data in the world has been produced

in the last six years. The scientific literature on the interaction of

humans with chemical substances alone is enormous. For illustration: PubMed is estimated

to cover 25% of biomedical literature. This database includes

about one million new articles per year, of which ~100,000 describe

exposures and ~800,000 include some effects of a substance

on a biological system. Grey literature, such as the internet,

databases of legacy data, -omics technologies, robotized testing,

sensor technologies, image analysis etc. continuously add to

this knowledge base. A critical challenge is in sharing of these

data, which has been a notorious problem in toxicology. Often

information is only in the possession of companies and shared with

regulators in confidence, if at all. Not only do we have to overcome

these hurdles, but we also need to establish data collection and/or

metadata standards. This essentially refers to the FAIR principles (Findable, Accessible, Interoperable, Reusable),

i.e., making data available in a way that others can use them.

Toxicology is thus currently moving from a data-poor to a data-

rich science, though too many things are still siloed. Raw data

is often behind paywalls or regulatory walls, which can include

being shielded from the public with claims that it contains

confidential business information. Consequently, we only see the

tip of the iceberg and data is often not accessible.

Adding to this, no ontologies or metadata allowing people to

make use of each other’s data are available. We generate a lot

of it every day, and generally do not know how to integrate it

unless it is highly curated. Data can be structured by chemical

identity. With more and more data available, the field of

toxicology becomes dynamic and needs consistent support, e.g.,

to host a central database online. Such a tool needs agreement

regarding how to combine data across datasets. Data needs to

be shareable and usable for machine learning. Ideally, a real-time

assessment would be implemented based on monitoring (for

example, integrating data via application programming interfaces

(APIs)), but such broader integration is hindered by the varying

levels of technology used and available in practice. A steward,

shared semantics, standards, and a definition of the level of information

needed for human prediction are required. Initially, the focus might

be on narrow chemical spaces with many studies/replicates.
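Structuring data by chemical identity requires, at minimum, an agreed identifier and an agreed normalization of it. The sketch below groups records from several sources under a shared key. The field name `inchikey`, the source names, and the placeholder key values are all hypothetical; a real system would also need to reconcile different identifier types (e.g., CAS RN versus InChIKey), salts, and mixtures, none of which is attempted here.

```python
from collections import defaultdict


def integrate_by_identifier(*sources, key_field="inchikey"):
    """Group records from several (source_name, records) pairs under a
    normalized chemical identifier; records lacking one are set aside."""
    merged = defaultdict(list)
    unmatched = []
    for source_name, records in sources:
        for rec in records:
            key = (rec.get(key_field) or "").strip().upper()
            if not key:
                unmatched.append({"source": source_name, **rec})
                continue
            merged[key].append({"source": source_name, **rec})
    return dict(merged), unmatched


# Hypothetical records from two sources describing the same chemical.
assay_db = ("assay_db", [
    {"inchikey": "key-a", "endpoint": "cytotoxicity", "ac50_uM": 12.0},
    {"endpoint": "record without identifier"},
])
literature = ("literature", [{"inchikey": "KEY-A ", "endpoint": "liver weight"}])
merged, unmatched = integrate_by_identifier(assay_db, literature)
```

Keeping unmatched records visible, rather than silently dropping them, makes the cost of missing identity metadata measurable.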

A central problem of safety assessments is how to define

something as safe. Typically, we have enough data to say

something is toxic, but when do we know enough to say it is

safe? The absence of evidence is not evidence of absence; i.e., a

lack of evident toxicity does not mean that toxicity could not manifest

under different circumstances that are not adequately covered

in the test systems. This calls for post-marketing

surveillance, as done for drugs after market entry, or

more generally for alertness to consumer feedback and

new scientific findings.

Systematic Review Methods

A central problem of toxicology is evidence integration (enabling

integration of diverse, cross-disciplinary sources of information)

as more and more methodologies and results, some conflicting

and others difficult to compare, are accumulating. This is a

challenge faced in more and more risk assessments, but also in

many systematic review methods that need to combine different

evidence streams (NASEM, 2011, 2017b, 2021; Woodruff and

Sutton, 2014; Samet, 2020; EPA, 2020; EFSA and EBTC, 2018).

Evidence integration is needed at very different levels (data,

studies, and other stressors), as well as across evidence streams.

The central opportunities are in quality assessment and AI,

especially natural language processing (NLP). There needs to

be a common platform, especially on the data side, for dynamic

modeling (“dynamic data requires dynamic models”), sharing,

quality control, hardware and software, standards, metadata,

automated annotation, continuous adaptation to AI progress

(e.g., explainable AI), evidence-based medicine as a role model,

composition of test strategies, validation, and probabilistic risk

assessment. This collaborative open platform to transparently

collect, process, share, and interpret data, information and

knowledge on chemical and non-chemical stressors will enable

real-time and rapid evidence integration, empowering all

steps of protection of human health and the environment.

The combination of tests and other assessment methods

in integrated testing strategies (Hartung, 2013; Tollefsen,

2014; Rovida, 2015), known as integrated approaches to testing and

assessment (IATA) or defined approaches (DA) by the Organisation for

Economic Co-operation and Development, is needed to integrate

different types of evidence.
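One way a testing strategy can combine results from several assays into a single probability is sequential Bayesian updating. The sketch below is one possible probabilistic data interpretation procedure, not the procedure of any particular IATA or DA. It assumes that each assay's sensitivity and specificity are known and that assay results are conditionally independent given the true hazard status. That is a strong simplification, since assays probing the same mechanism are often correlated. The prior and assay characteristics below are hypothetical.

```python
def update_probability(prior, sensitivity, specificity, positive):
    """Bayes' rule for one binary test result: P(hazard | result)."""
    if positive:
        like_hazard, like_no_hazard = sensitivity, 1.0 - specificity
    else:
        like_hazard, like_no_hazard = 1.0 - sensitivity, specificity
    numerator = prior * like_hazard
    return numerator / (numerator + (1.0 - prior) * like_no_hazard)


def integrate_tests(prior, results):
    """Fold a sequence of (sensitivity, specificity, positive) results into a
    posterior probability, assuming conditional independence between tests."""
    p = prior
    for sensitivity, specificity, positive in results:
        p = update_probability(p, sensitivity, specificity, positive)
    return p


# Hypothetical battery: two symmetric assays, then a third, more specific assay.
posterior = integrate_tests(0.5, [(0.8, 0.8, True), (0.8, 0.8, True), (0.7, 0.95, False)])
```

The output is a probability rather than a toxic/non-toxic call. This links testing strategies to the probabilistic approaches discussed later in this section.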

Role of Machine Learning and Artificial Intelligence

We need evidence integration on the levels of data, information,

knowledge, and ultimately action. A system for integrating across

different levels of information that are each integrated within

their own space requires broad integration of quality information

with the proper infrastructure to support this. The vision is to

create an infrastructure with harmonized agreement on the

levels of information and for what they may be best suited. For

this and its broad use, more toxicologists with computational

skills are needed. We also need common vocabularies across

different levels of information, data architectural standards for

release and utilization, real-time integration (e.g., through APIs),

and annotation at different levels. Such annotation requires

the connection of raw data to study metadata and the use of

language that a computer can digest by NLP through ontologies,

standardized “controlled” vocabularies, harmonized templates

such as IUCLID (https://iuclid6.echa.europa.eu/), and a library of

synonyms. A major question is what can be done to make sure

data is encoded/tagged to make it useful? High-quality training

sets for annotation to build knowledge graphs, causal networks,

etc. need to be developed. However, the use of annotation/

structured databases is an old way of looking at things. It is

almost impossible to get people to conform to data annotation

guidelines, so instead the field will need to embrace methods to

manage unstructured data.

We might rather spend energy on building better NLP and deep

learning technologies to analyze unstructured data. The enormous

progress on NLP in recent years means that we are moving very

close to passing the Turing Test, if we have not already. The

Turing Test is a deceptively simple method of determining whether

a machine can demonstrate human-like intelligence: if a machine can

engage in a conversation with a human without being detected as

a machine, it is deemed to have demonstrated such intelligence. Over the last

two years, enormously large models have been trained (Hoffmann,

2022). They use 140 to 530 billion parameters and 170 billion to 1.4

trillion training tokens. Some of these models claim to have been

trained on the entire Internet. They can respond in real-time to

questions with high accuracy, write articles indistinguishable from

those by human authors and even write code for computers. The

first impact of the NLP breakthrough is that human knowledge

becomes machine-readable. Our vision is that this enables the

creation of similar models to virtually grasp the interaction of

organisms with chemical substances.

Toxicologists can read and extract information better, but

a computer can do this faster on many more sources. We

now need to train computers to be as good as humans in

interpreting data. AI is the best tool for evidence integration,

and evidence integration must become the standard for risk

and safety assessments. The big question is: how are people

going to use this information generated by AI? Here we need

to separate our vision from its implementation. Toxicological

research areas and associated S&T advances can overcome

hurdles to enable toxicology as a predictive science via evidence

integration. Building on the explosion in the use of machine learning

and data science and on the emerging use of NLP, knowledge graphs,

and next-generation ~omics analytics, we need to move to

explainable AI, embrace reinforcement learning, and adopt modern

database management. The platform to be established will

need an IT architecture, hardware and software, continuous

deployment/support, decision support tools, expert systems, etc.

Evidence-based methodologies as furthered by evidence-based

toxicology⁷ (e.g., systematic review principles, risk of bias,

meta-analysis, quality scoring, probabilistic approaches) can serve as

role models for objective and transparent handling of evidence.

Besides making sense of evidence pieces, such a platform can

also guide the composition and validation of testing strategies

(IATAs, DAs, AOP networks) and the extraction of human-relevant

reference datasets.

7 https://www.ebtox.org

Probabilistic Approaches

Recognizing that science delivers probabilities rather

than absolutes, probabilistic tools lend themselves to all of

these tasks (Maertens, 2022; Chiu and Paoli, 2020). They will enable us to

move away from black/white, toxic/non-toxic dichotomies, as

well as better support life cycle and socioeconomic analyses

that require evaluation of incremental benefits or risks rather

than “bright line” evaluations (NASEM, 2009; Chiu, 2017;

Fantke, 2018, 2021). Substantial progress on developing and

implementing probabilistic risk assessment approaches has

been made in the last 10 years (Chiu and Slob, 2015; Chiu,

2018), with the publication of guidance from the WHO/IPCS

(World Health Organization & International Programme on

Chemical Safety, 2018). Conceptually, this involves replacing

the fixed values currently used for both the initial “point

of departure” dose and the “uncertainty factors”

with distributions that reflect the state of scientific

understanding, incorporating and combining uncertainties

quantitatively through statistical approaches (see Figure 9

for an example applied to the Reference Dose). Several case

studies illustrating the broad application of probabilistic

approaches have been published (Blessinger, 2020; Chiu,

2018; Kvasnicka, 2019). Moreover, this conceptual approach

to deriving toxicity values probabilistically can be extended

to non-animal studies (Chiu and Paoli, 2020), as well as to

incorporating population variability through genetically diverse

models described above (Chiu and Rusyn, 2018; Rusyn, 2022).

In this way, probabilistic approaches provide a framework that

facilitates integration across different data types and sources.
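The conceptual model of Figure 9 can be sketched as a Monte Carlo calculation. It samples an uncertain point of departure, an uncertain inter-species/IVIVE adjustment, and an uncertain degree of human variability, and then reports a lower confidence bound on the dose affecting the I-th percentile individual, alongside the traditional POD/100 value. This is a minimal sketch under loudly stated assumptions: every distribution and parameter value below is a hypothetical placeholder, not a WHO/IPCS default, and human variability is assumed to be lognormal, so that moving from the median to the I-th percentile human divides the dose by exp(z·σ).

```python
import math
import random
import statistics


def lognormal(rng, median, gsd):
    """Draw from a lognormal distribution given its median and geometric SD."""
    return rng.lognormvariate(math.log(median), math.log(gsd))


def sample_human_dose(rng, z_incidence):
    """One Monte Carlo draw of the human dose giving effect size M in the
    I-th percentile (most sensitive) individual. Every parameter below is a
    hypothetical placeholder, not a WHO/IPCS default."""
    pod = lognormal(rng, 10.0, 1.5)               # uncertain POD in test system, mg/kg-day
    af_inter = lognormal(rng, 4.0, 2.0)           # uncertain inter-species/IVIVE adjustment
    sigma_h = lognormal(rng, math.log(2.0), 1.5)  # uncertain log-scale SD of human variability
    af_intra = math.exp(z_incidence * sigma_h)    # median human -> I-th percentile human
    return pod / (af_inter * af_intra)


def probabilistic_rfd(n_draws=20000, incidence=0.01, confidence=0.95, seed=1):
    """Return (median estimate, one-sided lower confidence bound) of that dose."""
    z = statistics.NormalDist().inv_cdf(1.0 - incidence)  # about 2.33 for I = 1%
    rng = random.Random(seed)
    draws = sorted(sample_human_dose(rng, z) for _ in range(n_draws))
    median = draws[n_draws // 2]
    lower = draws[int((1.0 - confidence) * n_draws)]
    return median, lower


median, pr_rfd = probabilistic_rfd()
traditional_rfd = 10.0 / 100  # median POD divided by the default factor of 100
```

How the probabilistic bound compares with POD/100 depends entirely on the assumed distributions. The value of the approach is that the incidence I, the effect size M, and the confidence level become explicit, adjustable choices rather than being hidden inside a fixed factor.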

[Figure 9 panels. Traditional Approach: point of departure (POD), divided by 10 and again by 10, giving RfD = POD/100. Common Conceptual Model: test system (e.g., experimental animal, in vitro assay) → inter-species or IVIVE adjustment → “typical” member of human population → intra-species variability → “sensitive” member of human population. Probabilistic Approach: dose or concentration distribution with effect size M in test system → inter-species or IVIVE distribution → dose distribution with effect size M in median human → median to Ith percentile distribution → dose distribution with effect size M in Ith percentile human.]

Reference Dose (RfD): An estimate (with uncertainty spanning perhaps an order of magnitude) of a daily oral exposure to the human population (including sensitive subgroups) that is likely to be without an appreciable risk of deleterious effects during a lifetime.

Probabilistic RfD (PrRfD): A statistical lower confidence limit on the human dose at which a fraction I of the population shows an effect of magnitude (or severity) M or greater (for the critical effect considered).

Figure 9 Illustration of the transition from deterministic to probabilistic approaches when deriving reference doses from toxicity data. The “Traditional Approach” refers to the practice attributed to Lehman and Fitzhugh (1954) of deriving a “safe dose” by taking the dose level without significant effects in an animal study (a “point of departure”) and dividing it by a “safety factor” of 100. The “Common Conceptual Model” is an abstraction of this procedure. Information from a test system (whether an animal study or another type of data) is first adjusted to the “typical” in vivo human and then adjusted to account for human variability in susceptibility. The result is a dose level that is protective of “sensitive” members of the human population. The “Probabilistic Approach” further incorporates quantitative uncertainty and variability into this conceptual model by using probability distributions at each step instead of single numbers. The result is therefore a distribution (reflecting incomplete knowledge) for the dose that would cause an effect of magnitude “M” in the “I”th most sensitive percentile of the human population. [Adapted from World Health Organization & International Programme on Chemical Safety, 2018]
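The probabilistic approach in Figure 9 can be sketched numerically with Monte Carlo sampling. The sketch below assumes, for illustration only, that three quantities are independent lognormal distributions, each described by a median and a geometric standard deviation (GSD):

- the point of departure,
- the inter-species/IVIVE factor,
- the median-to-Ith-percentile factor.

The function name `probabilistic_rfd` and all input values are hypothetical. They are not taken from the WHO/IPCS guidance or from any cited case study. The lower 5th percentile of the sampled human-dose distribution plays the role of the PrRfD, and the traditional POD/100 is computed alongside for comparison.

```python
import numpy as np


def probabilistic_rfd(pod_median, pod_gsd, inter_median, inter_gsd,
                      intra_median, intra_gsd, confidence=0.95,
                      n_samples=200_000, seed=0):
    """Hypothetical Monte Carlo sketch of a probabilistic reference dose.

    Each input is treated as an independent lognormal distribution given by
    its median and geometric standard deviation (GSD). Returns the median and
    the lower confidence limit of the sampled distribution of the human dose
    causing effect size M in the I-th percentile human.
    """
    rng = np.random.default_rng(seed)

    def sample(median, gsd):
        # numpy's lognormal takes the mean and standard deviation of the
        # underlying normal, i.e. log(median) and log(GSD).
        return rng.lognormal(mean=np.log(median), sigma=np.log(gsd),
                             size=n_samples)

    pod = sample(pod_median, pod_gsd)            # point of departure in test system
    af_inter = sample(inter_median, inter_gsd)   # inter-species or IVIVE factor
    af_intra = sample(intra_median, intra_gsd)   # median-to-I-th-percentile factor

    human_dose = pod / (af_inter * af_intra)     # uncertainty distribution of HD_M^I
    lower = np.percentile(human_dose, 100 * (1 - confidence))
    return float(np.median(human_dose)), float(lower)


# Hypothetical inputs: POD in mg/kg-day; adjustment factors are dimensionless.
pod_median = 10.0
median_hd, pr_rfd = probabilistic_rfd(pod_median, 1.5, 4.0, 2.0, 5.0, 2.0)
traditional_rfd = pod_median / (10 * 10)         # fixed-factor approach: POD / 100

print(f"Median human dose: {median_hd:.3f}")
print(f"Probabilistic RfD (5th percentile): {pr_rfd:.3f}")
print(f"Traditional RfD (POD/100): {traditional_rfd:.3f}")
```

With these made-up inputs, the median human dose comes out near 0.5 and the 5th-percentile value near 0.09, close to the traditional 0.1. Different input distributions could move the two results far apart, which the single-number approach would not reveal.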


Toxicology Research Trajectory

Recognizing the broad research advances and opportunities that
have arisen in the 15 years since the 2007 Tox-21c report,
the workshop participants outlined their vision for the future
research trajectory needed to fulfill the promise of transforming
toxicology into an exposure-driven, technology-enabled,
evidence-integrated field that can better address population and
precision health while ensuring safe pharmaceuticals and a safer
environment. For each of the three research areas, participants
delineated a 5-, 10-, and 20-year plan for building capabilities
that would facilitate this transformation.

Exposure-driven Toxicology

Workshop participants identified four major areas of research
focus to advance exposure-driven toxicology in the coming
decades: 1) real-world-based exposure designs, 2) population-scale
measurements, 3) strategies to ask the right questions, and
4) consideration of ethical and policy implications.

Real-world-based Exposure Designs

Developments in this area are needed to enable better in
silico, cellular, organoid, model-organism, and full
population-based longitudinal studies. These developments will
allow studies to be designed in ways that support prediction
of environmental transport and fate (including chemical
transformations), inter-species comparisons, and an understanding
of the exposure levels and exposure mixtures relevant to the
population and its subgroups. They will also allow us to apply
that knowledge in experimental settings. Using real-world-based
exposure designs will make the results of different approaches
to similar questions easier to compare. It will also make it easier
to use triangulation as a key strategy for assessing the health
effects and relevant toxicity pathways of chemical and non-chemical
exposures.

Population-scale Measurements

To understand the relevant exposures that lead to disease in
general and specific populations, additional efforts are needed to
develop biobanks (including biological specimens) and ecobanks
(including environmental samples). These resources should provide
information on the distribution of thousands of chemicals and
non-chemical stressors in relevant populations. Factors of interest
include exposure scenarios, sociodemographic conditions, and
disease or health status. Beyond human populations, including animals
and the ecosystem in real-world exposure assessment is
relevant to human environmental health, and to environmental
health and toxicology more broadly. Recent studies, for instance,
have shown that exposure assessment in companion
animals, such as cats wearing non-invasive silicone tags, can contribute
to the assessment of flame retardants in homes and their potential
role in feline hyperthyroidism (Poutasse, 2019).

Ask the Right Questions

One of the complexities in the current field of omics technology
is how to prioritize the right questions in a way that leads
to the correct computational approach. For instance, the
question might concern the total mixture or specific
components of a mixture. Thinking strategically, and with the
right stakeholders (community members, policymakers, interdisciplinary
scientists), will help develop the right questions
in ways that are most useful to society. Those questions should also
respect the privacy concerns many people have regarding the
unintended consequences of data sharing.

Ethical and Policy Implications

A substantial part of the workshop discussion focused
on the ethical and policy implications of
toxicological research, including the disproportionate burden
of exposures borne by disadvantaged communities. Groups
discussed the need for research to address those concerns by
incorporating community engagement, citizen
support, and environmental justice, elements that must keep pace with
the technology.

Anticipated Capabilities

For exposure-driven toxicology, the workshop participants
anticipated the following key achievements, summarized in Table 1
and described here:

At 5 years:

• Scale up technology for high-quality, inexpensive assessment
of 1000-5000 chemicals that can be tagged to exogenous
exposures, including non-chemical stressors. Current technology is
slow and its throughput is too low, which
makes exposomic approaches expensive.

• Develop libraries that tag key information for those chemicals
(metadata layering) to support their interpretation. Many chemical
signatures that can be identified with untargeted technologies
currently lack validation of their ability to predict health effects.

• These technology problems can be solved through effort
and investment, similar to the Human Genome Project.

At 10 years:

• Scalable exposomics technology that is high-throughput,
inexpensive, sensitive, and specific will allow us to apply this
exposure-based approach to longitudinal studies and biobanks.

• Retrospective and prospective studies will ensure that data are
“FAIR” (findable, accessible, interoperable, and reusable) and will
link the exposome with health outcomes.

• Retrospective studies will allow us to go back decades and

leverage biobanks. At the same time, we will be able to plan new

prospective studies to evaluate the exposures of the future.

At 20 years:

• Exposome-disease prediction will integrate detailed exposure-based
information with health outcome data from numerous large
populations. We will achieve a high level of precision in
environment-based disease prediction that can also leverage
gene-environment interactions.

• This knowledge will provide us with new forms of exposome-targeted
prevention and treatment.


Table 1: Timeline for Key Exposure-driven Toxicology Developments

| Key Capability | Near-term (5-yr) goal | Mid-term (10-yr) goal | Long-term (20-yr) goal |
|---|---|---|---|
| Analytical chemistry | Exposome assays (1000-5000/person) | High-throughput exposome assays (10,000/person) | Exposome-disease prediction |
| Metabolomics, toxicology | Reference exposome library (metadata layering) | Organ-specific disease associated | Exposome-targeted treatment and prevention |
| Epidemiology, clinical research | Disease-associated metabolites | Retrospective and prospective studies ensuring “FAIR” data and linking the exposome with health outcomes | Exposome-targeted treatment |

Technology-enabled Toxicology

Workshop participants anticipate new technological capabilities
in two key research areas to fulfill the promise of transforming
toxicology (see Table 2): biological capabilities
to provide a diversity of cells and tissues, and bioengineering
capabilities to develop relevant and reproducible assays.
In each area, a set of supporting computational capabilities
will also need to be developed.

Biological Capabilities

The critical path for biological capabilities lies in
understanding heterogeneity and susceptibility throughout
the life course at multiple scales, from cells to the whole
organism. As genetics has turned out to be a much smaller
factor in outcomes than originally anticipated, there is a